CrossGen: Learning and Generating Cross Fields for Quad Meshing

⁴Wayne State University, ⁵Texas A&M University, ⁶RWTH Aachen University

Abstract

Cross fields play a critical role in various geometry processing tasks, especially quad mesh generation. Existing methods for cross field generation often struggle to balance computational efficiency with generation quality, relying on slow per-shape optimization. We introduce CrossGen, a novel framework that supports both feed-forward prediction and latent generative modeling of cross fields for quad meshing by unifying geometry and cross field representations within a joint latent space. Our method computes high-quality cross fields for general input shapes extremely fast, typically within one second and without per-shape optimization. It takes a point-sampled surface (a point-cloud surface) as input, so it can accommodate many different surface representations through a straightforward point sampling step. Using an auto-encoder architecture, we encode input point-cloud surfaces into a sparse voxel grid of fine-grained latent embeddings, which are decoded into both SDF-based surface geometry and cross fields. We also contribute a dataset of models with both high-quality signed distance field (SDF) representations and their corresponding cross fields, and use it to train our network. Once trained, the network computes the cross field of an input surface in a feed-forward manner, offering high geometric fidelity, noise resilience, and rapid inference. Furthermore, leveraging the same unified latent representation, we incorporate a diffusion model for computing cross fields of new shapes generated from partial input, such as sketches. To demonstrate its practical value, we validate CrossGen on the quad mesh generation task for a large variety of surface shapes. Experimental results show that CrossGen generalizes well across diverse shapes and consistently yields high-fidelity cross fields, thus facilitating the generation of high-quality quad meshes.
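Because CrossGen consumes point-cloud surfaces, other representations must first be sampled into points. As a minimal sketch, assuming the source is a triangle mesh and using NumPy, area-weighted uniform surface sampling could look like this. The function name `sample_surface` and the returned face normals are hypothetical illustrations, not part of CrossGen's published interface.

```python
import numpy as np

def sample_surface(vertices, faces, n_points, rng=None):
    """Uniformly sample n_points on a triangle mesh (area-weighted).

    Hypothetical helper: returns sampled points and the unit normal of the
    face each point was drawn from."""
    rng = np.random.default_rng(rng)
    v0, v1, v2 = (vertices[faces[:, i]] for i in range(3))
    cross = np.cross(v1 - v0, v2 - v0)
    areas = 0.5 * np.linalg.norm(cross, axis=1)
    # Pick faces with probability proportional to their area.
    face_idx = rng.choice(len(faces), size=n_points, p=areas / areas.sum())
    # Uniform barycentric coordinates via the square-root trick.
    r1 = np.sqrt(rng.random(n_points))
    r2 = rng.random(n_points)
    a, b, c = 1.0 - r1, r1 * (1.0 - r2), r1 * r2
    pts = (a[:, None] * v0[face_idx]
           + b[:, None] * v1[face_idx]
           + c[:, None] * v2[face_idx])
    normals = cross[face_idx] / np.linalg.norm(cross[face_idx], axis=1, keepdims=True)
    return pts, normals
```

Sampling by area (rather than picking faces uniformly) keeps the point density even regardless of how the mesh is tessellated; the page's experiments use inputs on the order of 10K to 150K points.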

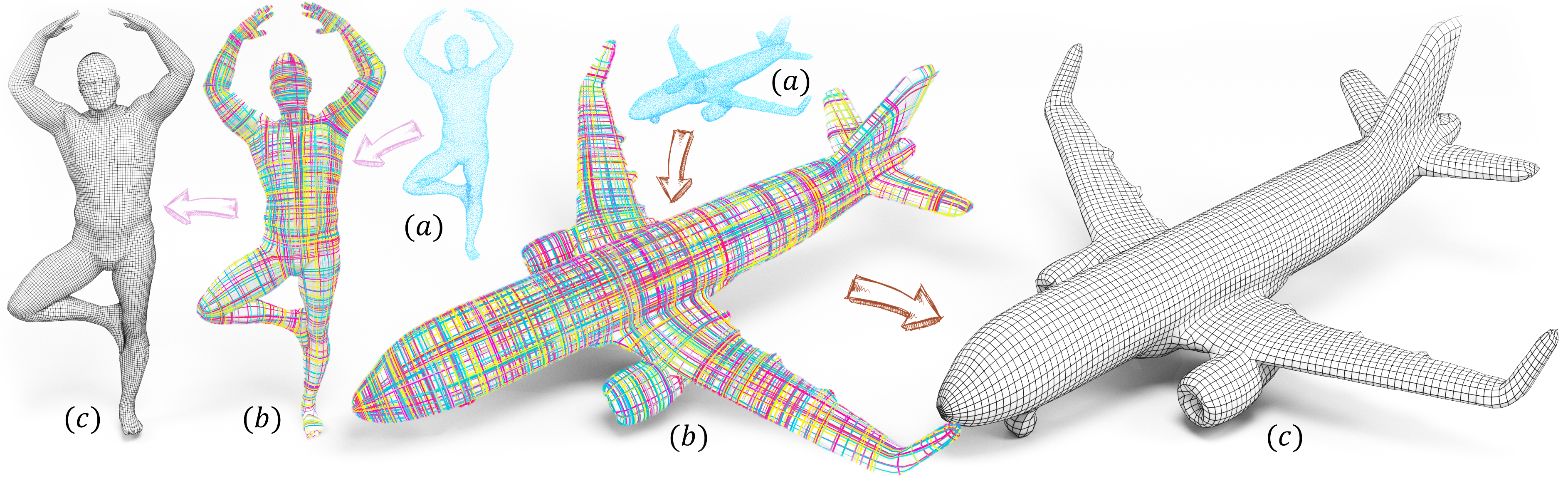

Results produced by CrossGen, which predicts cross fields on given shapes for quad meshing in a feed-forward manner without per-shape optimization. (a) Input shapes as point-cloud surfaces; (b) cross fields generated by CrossGen; (c) the resulting quad meshes. CrossGen shows significant advantages in efficiency and generalizability across various shape types, enabling fast, high-quality quad mesh generation for downstream applications.

Introduction

In this paper, we make three contributions. (1) We develop a novel auto-encoder network as the backbone of CrossGen for robust learning of cross fields for a large variety of input shapes. Specifically, the design of the encoder, with its local perception field, enables CrossGen to generalize well across diverse shape types and to remain robust to out-of-domain inputs and rotational variations. At inference time, CrossGen computes a cross field within one second, several orders of magnitude faster than state-of-the-art optimization-based methods. (2) We contribute a training dataset of over 10,000 shapes annotated with high-quality SDFs and cross fields, the first dataset of its kind. (3) We present extensive validation and comparisons of CrossGen with existing methods, demonstrating its efficacy in terms of efficiency and the quality of the computed cross fields and quad meshes.

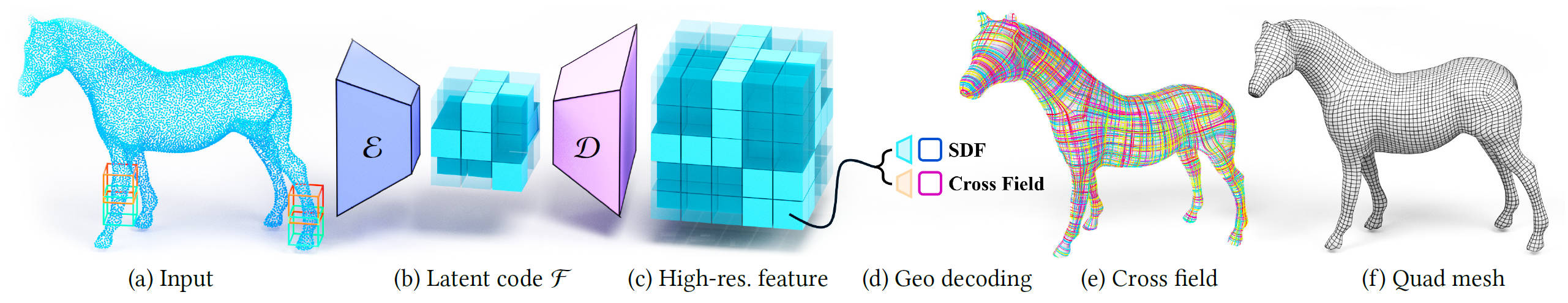

Our network pipeline for learning and generating SDFs and cross fields. Given a geometry input (mesh or point cloud) (a), we first encode the local geometry into sparse grids of latent embeddings (b), shown in light blue, and then decode these latent embeddings into a high-resolution feature grid (c), which is interpreted (d) into an SDF and a cross field (e) for downstream quad mesh generation (f).
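The encoding step stores latent embeddings only in voxels that the surface actually touches. The sketch below shows just that sparse-grid bookkeeping under stated assumptions: the shape is normalized to [-1, 1]^3, and simple mean pooling of per-point features stands in for CrossGen's learned local encoder, whose details are not given here. Both function names are hypothetical.

```python
import numpy as np

def sparse_voxelize(points, resolution):
    """Map points in [-1, 1]^3 to the sparse set of voxels they occupy.

    Returns integer coordinates of occupied voxels (M, 3) and, for every
    point, the index of its voxel in that list."""
    pts = np.clip(points, -1.0, 1.0 - 1e-9)  # keep x = 1 inside the last voxel
    ijk = np.floor((pts + 1.0) * 0.5 * resolution).astype(np.int64)
    coords, inverse = np.unique(ijk, axis=0, return_inverse=True)
    return coords, inverse.reshape(-1)

def pool_features(features, inverse, n_voxels):
    """Average per-point features inside each voxel.

    A placeholder for a learned encoder: only occupied voxels get a row."""
    sums = np.zeros((n_voxels, features.shape[1]))
    np.add.at(sums, inverse, features)  # unbuffered scatter-add per voxel
    counts = np.bincount(inverse, minlength=n_voxels)[:, None]
    return sums / counts
```

Memory then grows with the surface area rather than with resolution cubed, which is the usual motivation for sparse grids at fine resolutions.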

Results

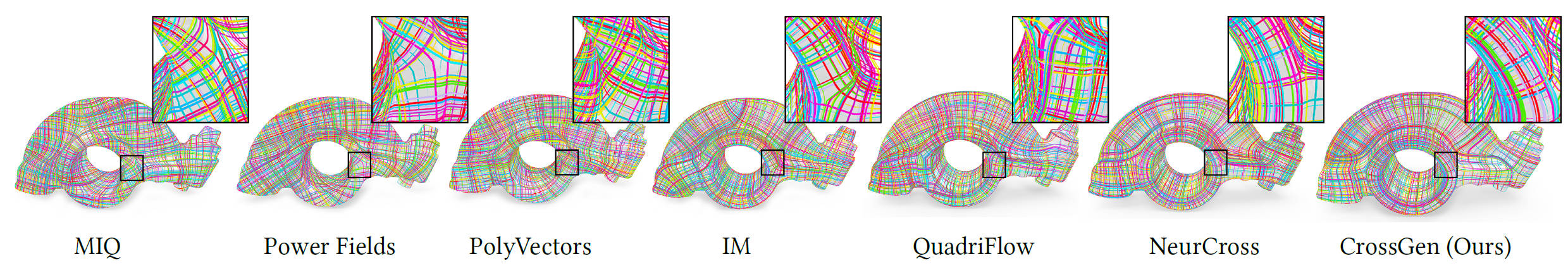

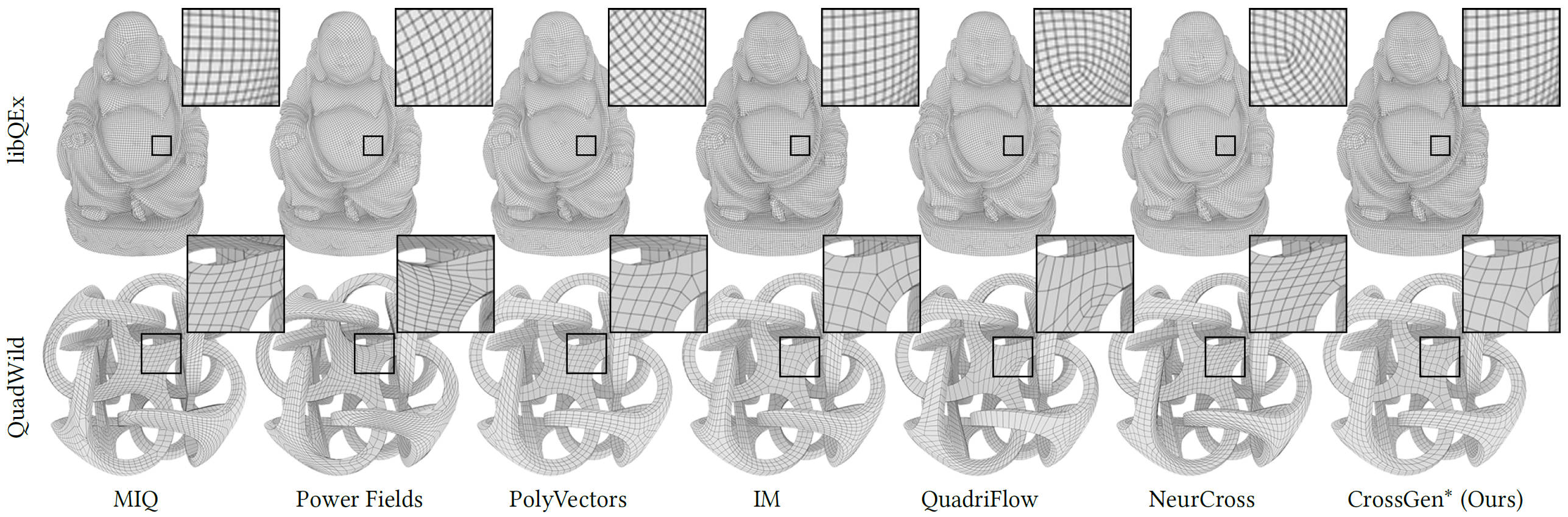

Cross fields generated by six state-of-the-art methods and by CrossGen. Both CrossGen and NeurCross, which provides the ground-truth cross fields for our dataset, produce smooth cross fields that align well with the principal curvature directions.
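A cross is unchanged by a rotation of π/2 about the surface normal, so alignment with a principal curvature direction has to be measured modulo π/2. A common way to handle this symmetry, assumed here since the page does not describe CrossGen's internal encoding, is to express the cross by an angle θ in a local tangent frame and store (cos 4θ, sin 4θ). The helper names below are hypothetical.

```python
import numpy as np

def encode_cross(theta):
    """Map a cross angle (radians, local tangent frame) to a 4-fold-invariant 2-vector."""
    theta = np.asarray(theta, dtype=float)
    return np.stack([np.cos(4 * theta), np.sin(4 * theta)], axis=-1)

def decode_cross(v):
    """Recover one representative cross angle in (-pi/4, pi/4]."""
    return np.arctan2(v[..., 1], v[..., 0]) / 4

def alignment_error(theta, phi):
    """Smallest angle between any of the four cross directions and direction phi."""
    d = np.mod(np.asarray(theta) - np.asarray(phi), np.pi / 2)
    return np.minimum(d, np.pi / 2 - d)
```

For example, `alignment_error(0.1, 0.0)` is 0.1 rad, while a cross at 0 is perfectly aligned with a direction at π/2; the error never exceeds π/4.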

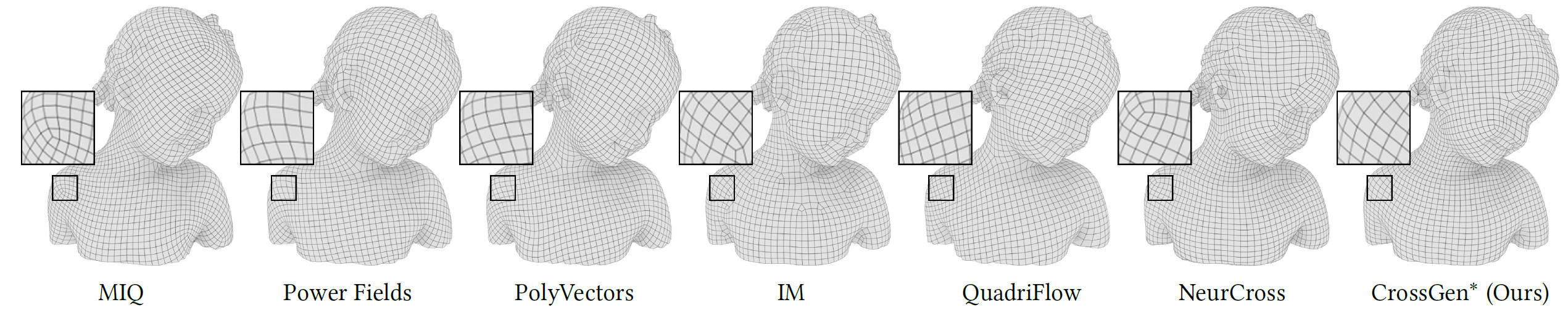

Quad meshes generated by six baseline methods and by CrossGen. Benefiting from large-scale data-driven learning, our method produces smoother and more regular quad meshes than all other approaches.
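Quad mesh regularity is commonly judged by counting irregular (singular) vertices, that is, interior vertices whose valence differs from 4. The page does not state which metric underlies this comparison; the sketch below is a generic, assumed way to count them for a quad mesh given as lists of four vertex indices. Boundary vertices are skipped because their regular valence is not 4.

```python
from collections import defaultdict

def irregular_vertices(quads):
    """Return sorted indices of interior vertices whose valence is not 4."""
    edge_count = defaultdict(int)
    for q in quads:
        for i in range(4):
            a, b = q[i], q[(i + 1) % 4]
            edge_count[(min(a, b), max(a, b))] += 1  # undirected edge key
    valence = defaultdict(int)
    boundary = set()
    for (a, b), count in edge_count.items():
        valence[a] += 1
        valence[b] += 1
        if count == 1:  # edge used by only one quad lies on the boundary
            boundary.update((a, b))
    return sorted(v for v, k in valence.items() if v not in boundary and k != 4)
```

A regular grid patch yields an empty list, whereas every vertex of a closed cube (valence 3) is irregular.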

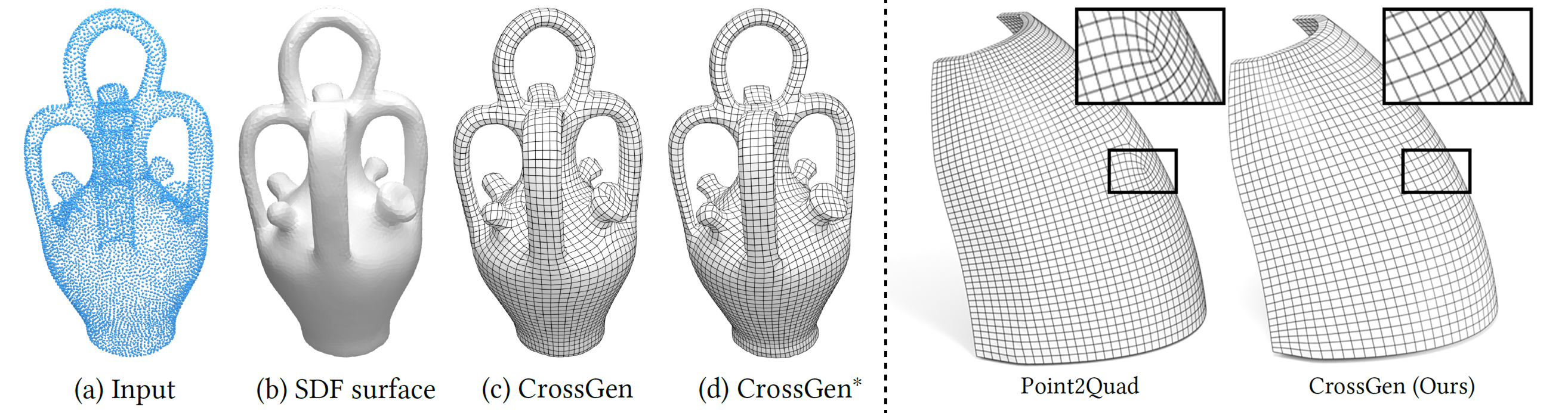

Left: SDF and quad mesh results from point cloud input. Since quad extraction requires a triangle mesh, given a point-cloud surface (a), we use the SDF branch to reconstruct a triangle mesh (b), from which we extract a quad mesh (c). (d) shows the quad mesh extracted from the ground-truth triangle mesh. Right: Comparison with Point2Quad on CAD shapes. Our method produces more regular and smoother quad meshes aligned with the principal curvature directions, whereas Point2Quad often produces meshes with more singularities. The Point2Quad results were obtained with its official code repository.
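Turning the predicted SDF into a triangle mesh is typically done with marching cubes; the page does not say which extraction routine CrossGen uses. The sketch below shows only the first step of that procedure, assuming the SDF has already been evaluated on a regular grid: vertices are placed where the SDF changes sign along grid edges, by linear interpolation. Triangulation is omitted; a full implementation such as scikit-image's `measure.marching_cubes` could be used for that. The helper name is hypothetical.

```python
import numpy as np

def zero_crossings(sdf, origin=0.0, spacing=1.0):
    """Locate surface points where a sampled SDF changes sign along grid edges.

    sdf[i, j, k] is the SDF value at origin + spacing * (i, j, k).
    Returns an (M, 3) array of linearly interpolated points."""
    found = []
    for axis in range(3):
        s = np.moveaxis(sdf, axis, 0)       # bring the edge direction to the front
        a, b = s[:-1], s[1:]                # SDF values at both ends of each edge
        mask = (a < 0) != (b < 0)           # edge straddles the zero level set
        idx = np.argwhere(mask).astype(float)
        t = a[mask] / (a[mask] - b[mask])   # root of the linear interpolant, in [0, 1]
        pos = np.empty_like(idx)
        pos[:, axis] = idx[:, 0] + t
        others = [d for d in range(3) if d != axis]
        pos[:, others] = idx[:, 1:]         # remaining axes keep their grid index
        found.append(origin + spacing * pos)
    return np.concatenate(found, axis=0)
```

Because an exact SDF is 1-Lipschitz, each interpolated point lies within one grid spacing of the true surface; finer grids give proportionally tighter surfaces.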

Visualization of quad meshes generated for a free-form shape and a CAD-type shape by six baseline methods and our CrossGen. The free-form quad mesh is extracted using libQEx, while the CAD-type quad mesh is extracted using QuadWild.

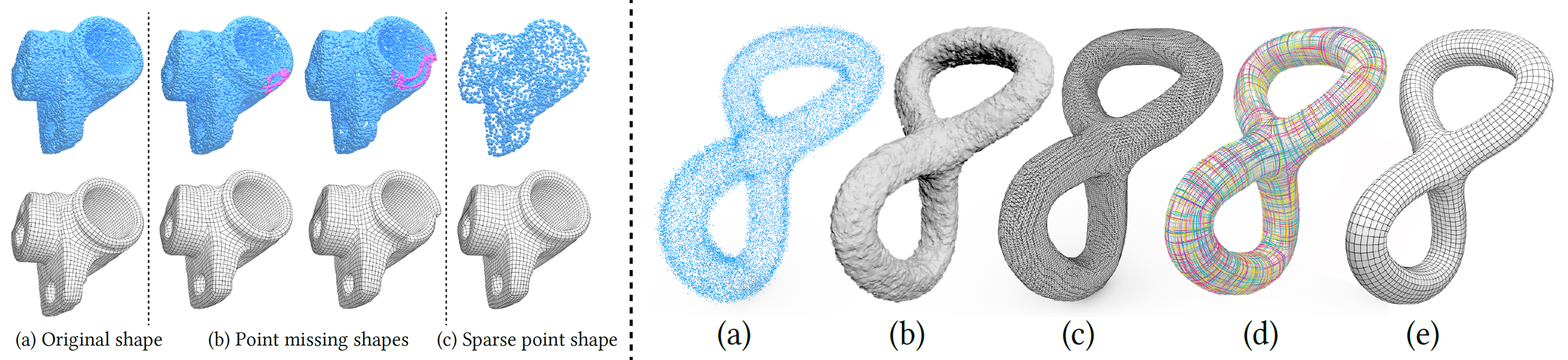

Left: Quad meshes generated by our method under varying input conditions. From left to right: (a) the original point cloud with 150K points; (b) a point cloud with missing regions (highlighted in light violet); and (c) a sparse point cloud with 10K points. Right: Cross field generation from noisy point cloud input. Given noisy input (a), Poisson reconstruction produces a noisy surface (b), whereas our SDF branch reconstructs a smooth geometry (c). Surface points sampled from our geometry enable accurate cross field prediction (d) and subsequent quad meshing (e), demonstrating robust cross field generation from noisy data.
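The robustness experiments feed CrossGen sparser, incomplete, and noisy versions of a point cloud. The page does not specify how these inputs were produced, so the helpers below are only one plausible, hypothetical way to synthesize them; in particular, ball-shaped holes and isotropic Gaussian noise are assumptions.

```python
import numpy as np

def subsample(points, n, rng):
    """Randomly keep n points without replacement (e.g. 150K down to 10K)."""
    idx = rng.choice(len(points), size=n, replace=False)
    return points[idx]

def crop_ball(points, center, radius):
    """Remove all points within `radius` of `center` to simulate a missing region."""
    keep = np.linalg.norm(points - np.asarray(center), axis=1) > radius
    return points[keep]

def add_noise(points, sigma, rng):
    """Perturb every coordinate with Gaussian noise of standard deviation sigma."""
    return points + rng.normal(scale=sigma, size=points.shape)
```

Passing one `np.random.default_rng(seed)` through all three helpers keeps a degraded benchmark reproducible.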

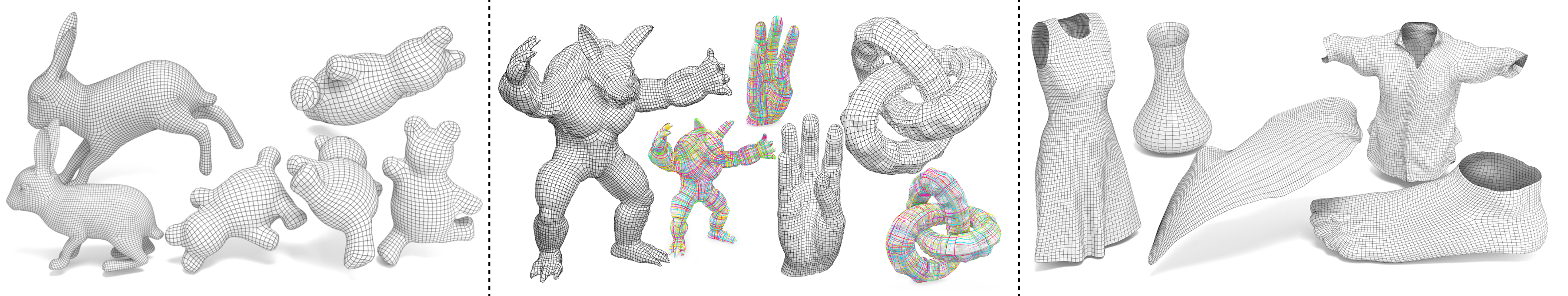

Left: Quad meshes generated by CrossGen under random poses, including both non-rigid deformations and rigid rotations. Middle: Out-of-domain generalization of CrossGen to unseen shape categories. Right: Cross field generation by CrossGen on surfaces with open boundaries. Our method generalizes robustly to open-boundary surfaces, enabling high-quality quad mesh extraction from the predicted cross fields.
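Under a rigid rotation R, the expected output is the rotated cross field: an ideal predictor f satisfies f(RX) = R f(X), so cross directions stay tangent to the rotated surface. The sketch below generates random test poses by normalizing a Gaussian 4-vector into a unit quaternion, which gives uniformly distributed rotations; this is a standard choice, not necessarily the one used for the figure, and non-rigid deformations are not covered. Function names are hypothetical.

```python
import numpy as np

def random_rotation(rng):
    """Uniformly random 3D rotation matrix from a normalized Gaussian quaternion."""
    q = rng.normal(size=4)
    w, x, y, z = q / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ])

def rotate_inputs(points, normals, cross_dirs, R):
    """Apply R to row-vector positions, normals and cross directions alike."""
    return points @ R.T, normals @ R.T, cross_dirs @ R.T
```

Comparing the prediction on rotated input with the rotated prediction on the original input (modulo the π/2 symmetry of crosses) gives a simple rotation-robustness check.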

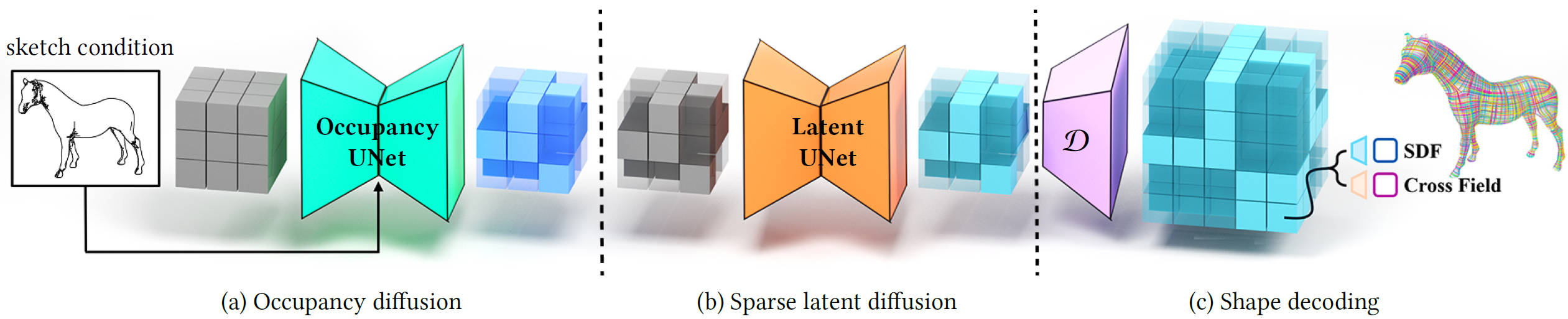

The sketch-conditioned diffusion pipeline in CrossGen, following LAS-Diffusion. (a) Occupancy diffusion conditioned on the input sketch: starting from dense voxel noise, it predicts a coarse voxel structure that captures the global shape layout. (b) Sparse latent diffusion: starting from sparse latent noise, it refines geometry and cross field information in the latent space. (c) Shape decoding: our pretrained decoder converts the denoised latent codes into a 3D shape and its corresponding cross field.
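Both stages are denoising diffusion models. As a hedged sketch of the standard DDPM forward process they build on, the code below adds noise to an array of latent codes, for instance one row per occupied voxel in stage (b). The linear schedule with β from 1e-4 to 0.02 over 1000 steps is the DDPM default and is assumed here; the page does not state the schedule used by CrossGen or LAS-Diffusion. Function names are hypothetical.

```python
import numpy as np

def make_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule and cumulative products alpha_bar_t = prod(1 - beta_s)."""
    betas = np.linspace(beta_start, beta_end, T)
    return betas, np.cumprod(1.0 - betas)

def q_sample(x0, t, alpha_bar, rng):
    """Draw x_t ~ N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I); also return the noise."""
    eps = rng.normal(size=x0.shape)
    a = alpha_bar[t]
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * eps, eps

def predict_x0(xt, t, eps_hat, alpha_bar):
    """Estimate x0 from x_t given a predicted noise eps_hat (e.g. from a denoiser)."""
    a = alpha_bar[t]
    return (xt - np.sqrt(1.0 - a) * eps_hat) / np.sqrt(a)
```

A denoising network is trained to predict `eps` from `x_t` (and, here, the sketch condition); plugging a perfect prediction into `predict_x0` recovers the clean latents exactly.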

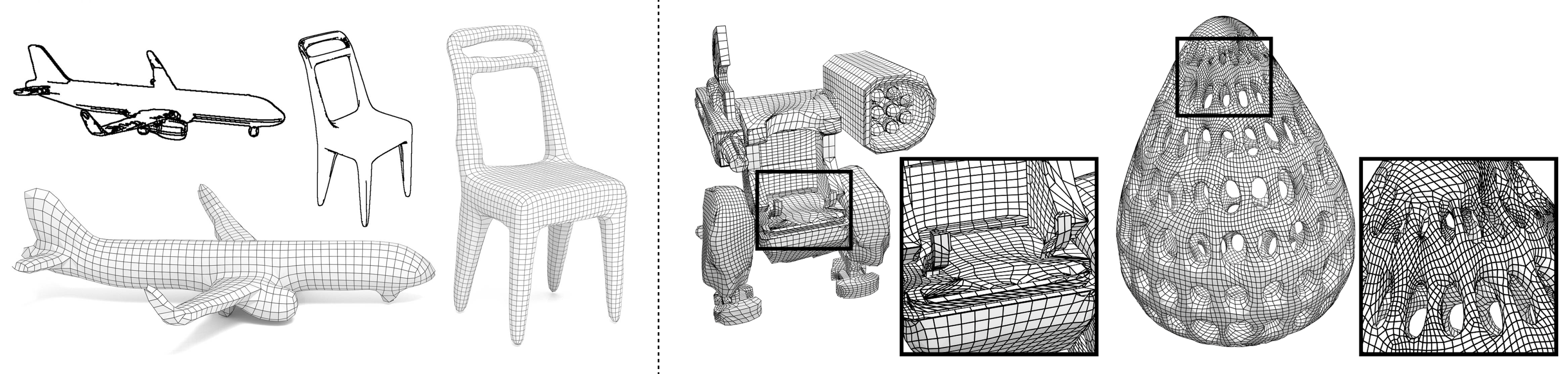

Left: Quad meshes generated by CrossGen via sketch-conditioned diffusion. Right: A failure case. Our method struggles with fine-grained geometric details, resulting in quad meshes that lack smoothness and global consistency.

Citation

@article{Dong2025CrossGen,
  author={Dong, Qiujie and Wang, Jiepeng and Xu, Rui and Lin, Cheng and Liu, Yuan and Xin, Shiqing and Zhong, Zichun and Li, Xin and Tu, Changhe and Komura, Taku and Kobbelt, Leif and Schaefer, Scott and Wang, Wenping},
  title={CrossGen: Learning and Generating Cross Fields for Quad Meshing},
  journal={arXiv e-prints},
  year={2025}
}

This page is maintained by the CrossGen team.